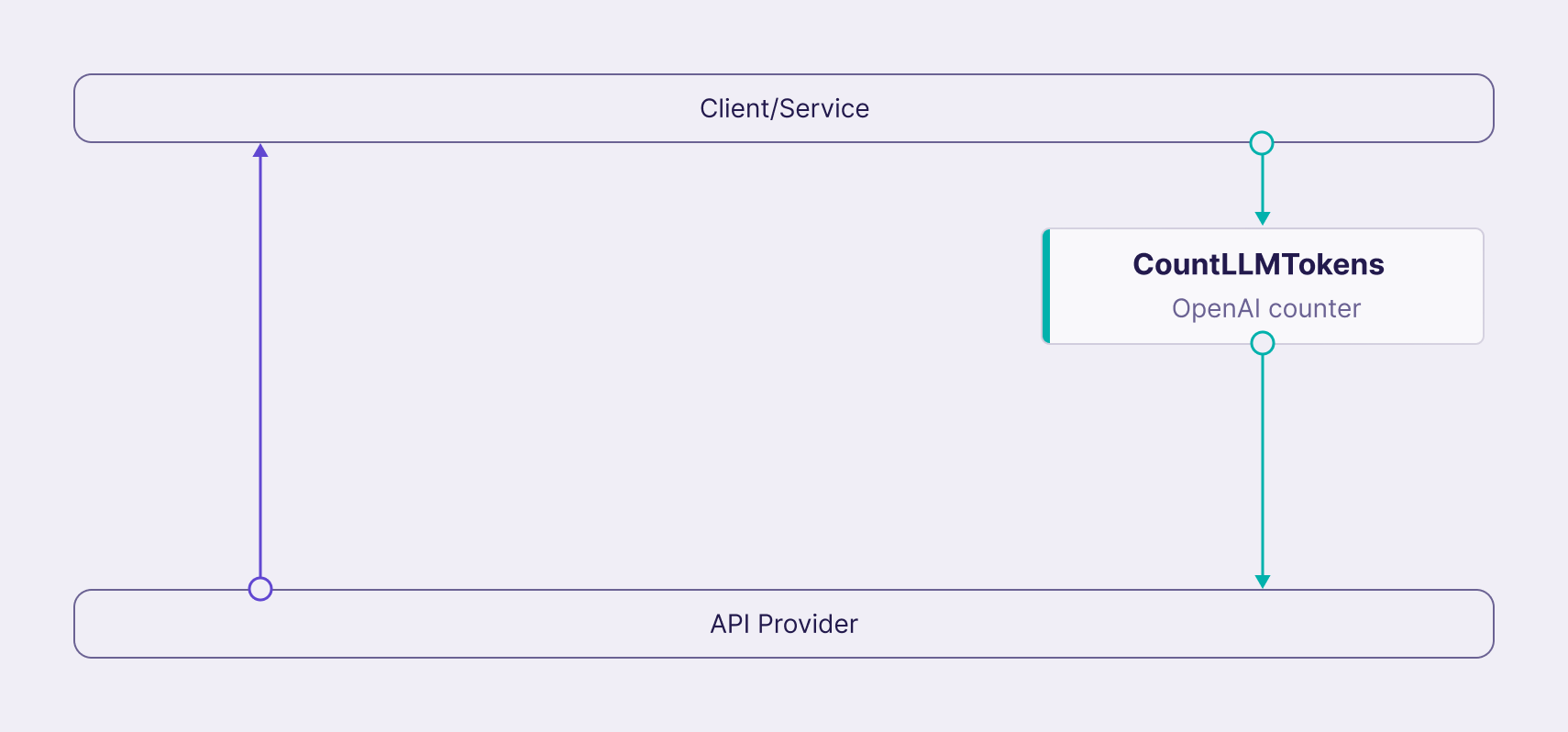

Count LLM Tokens flow

The "Count LLM Tokens" processor in Lunar.dev provides an estimation of the number of tokens required for a given request body, based on a specified Language Learning Model (LLM). This estimation is performed before the request is sent to the upstream LLM provider. The estimated token count is then added as a custom header, x-lunar-estimated-tokens, to the request, which is removed by Lunar.dev before the request is actually forwarded to the LLM provider. This mechanism allows users to leverage this token estimation for internal logic within Lunar.dev, most notably in conjunction with the Custom Quota feature, enabling precise control over LLM usage based on token consumption.

Scenarios

This feature allows users to implement fine-grained control over their LLM usage, leading to better cost management and adherence to provider limits. Here are a few scenarios illustrating its value:

Use Case 1: Preventing Overspending on LLM Costs

-

User Story: As a developer managing an application heavily reliant on a pay-per-token LLM, I want to prevent unexpected cost overruns. I want to define a maximum token limit per request, so that Lunar.dev automatically blocks requests exceeding this limit before they are sent to the LLM provider.

-

How the Flow Helps: The "Count LLM Tokens" processor estimates the tokens. A Custom Quota rule can then be configured to check the

x-lunar-estimated-tokensheader. If the estimated token count exceeds the defined limit, the request is rejected by Lunar.dev, preventing unnecessary LLM costs.

Use Case 2: Fair Usage and Prioritization

-

User Story: As a product owner, I want to ensure fair access to our LLM-powered features for all users and prevent a single user from consuming an excessive number of tokens. I want to implement a quota system that limits the total number of tokens a user can consume within a specific time window.

-

How the Flow Helps: By estimating tokens with this processor, and then using a Custom Quota based on the

x-lunar-estimated-tokensheader, it's possible to track and limit the total estimated tokens consumed by a user within a defined period. This allows for a more granular quota management than simply limiting the number of requests.

Use Case 3: Optimizing for Rate Limits based on Token Count

-

User Story: As an engineer integrating with an LLM provider that has different rate limits based on the number of tokens per request, I want to ensure our application stays within these limits to avoid throttling.

-

How the Flow Helps: The "Count LLM Tokens" processor allows for setting up rate limiting rules based on the estimated token count. For example, you could configure a more restrictive rate limit for requests with a higher estimated token count and a more lenient one for smaller requests, effectively optimizing usage according to the provider's rate limiting policies.

Use Case 4: Implementing Tiered Access Based on Token Consumption

-

User Story: As a business, we offer different service tiers with varying levels of LLM access. Higher tiers should allow for processing larger and more complex requests, which translate to higher token counts.

-

How the Flow Helps: By using the estimated token count from the header, different routing or processing logic can be applied based on the request size. For example, requests with estimated tokens below a certain threshold can be routed to a less expensive LLM model or a different processing pipeline.

In summary, the "Count LLM Tokens" flow provides a powerful mechanism to understand and control the token consumption of your LLM interactions within Lunar.dev, primarily enabling more sophisticated Custom Quota management and cost optimization.

Flow Components

CountLLMTokens Processor Custom Quota

Flow Example

name: CountRequestTokens

filter:

url: api.openai.com/v1/chat/completions

body:

- key: model

regex_match: "gpt-4-*"

processors:

CountLLMTokens:

processor: CountLLMTokens

parameters:

- key: store_count_header

value: "x-lunar-estimated-tokens"

- key: model

value: "gpt-4-*"

flow:

request:

- from:

stream:

name: globalStream

at: start

to:

processor:

name: CountLLMTokens

- from:

processor:

name: CountLLMTokens

to:

stream:

name: globalStream

at: end

response:

- from:

stream:

name: globalStream

at: start

to:

stream:

name: globalStream

at: end

quotas:

- id: MyQuota

filter:

url: api.openai.com

strategy:

fixed_window_custom_quota:

static:

max: 100

interval: 1

interval_unit: minute